The Goal & Plan

It took a while… Family, work, and a plain lack of energy kept me from starting, but I felt I just had to start.

So I created a comprehensive list of things I need to accomplish to get to what I think will be a v1 of the system.

Before diving into the plan I want to cover the vision/goal. I’ve covered it before, but I don’t think I gave it a true accounting, or at least homed in on what I wanted to do. So let me do that now.

What does v1 look like?

As a user, I want the ability to interact, using voice commands, with a virtual persona that will look, act, and respond like Armando (the subject). The user will ask questions and Armando will respond with factual, true responses. The user will also be able to ask Armando questions he has not encountered, and Armando will create responses that not only build on the data already present but are new ideas not in the system. These responses should give the user the feeling of, “Yeah, I can see Armando saying that”.

Given time, this version of Armando will be its own “thing” (soul? person? being?). The system will come up with its own ideas, thoughts, and responses that are unique and new to the system but derived from the data and networks created for it.

From a visual point of view, the system will act and sound like the subject: mannerisms, voice, hand gestures, and head movements. It will be presented in AR.

The Roadmap – At a High Level

- Data Identification (Data Scientist)

- Identify data that can be extracted for use: images, videos, comments, likes, shared content. These items may or may not come from hosted solutions. What types of data can be extracted?

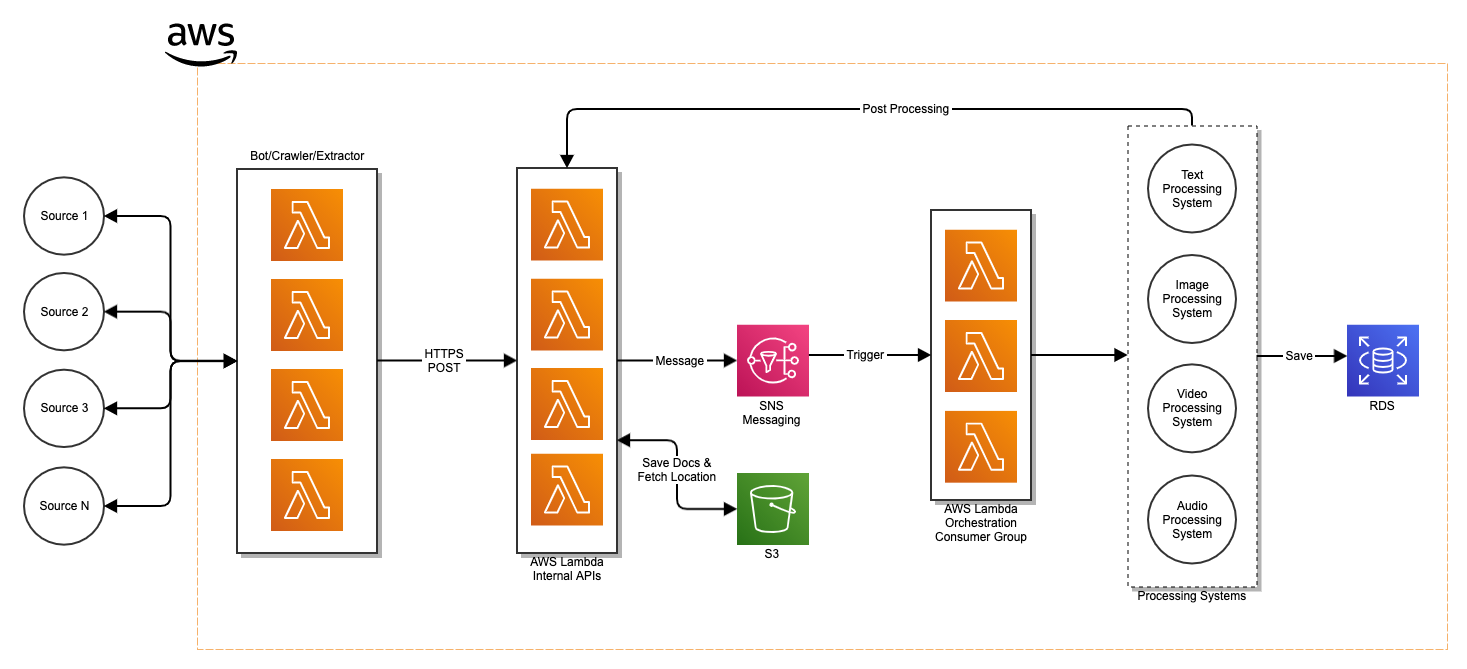

- Data Ingestion (Backend / Data Scientist)

- Architect and build services that will ingest the identified data into a data lake.

- Data Organization & Classification (Backend / Data Scientist)

- Organize the data in the data lake. How do the items relate to each other? Are there classification techniques that apply?

- Query Data – V0.1 (Backend)

- Build the first version to query the ingested data using voice commands. Look into tools that allow for this and begin using them.

- Query Data – v0.2 (Backend)

- Home in on results. Improve the results and how similar responses are handled. This will be a foundation for responding with new ideas, not just canned responses.

- Build 3D model of subject. (Frontend)

- Identify tools to create models using video and/or images. Then create a 3D model that looks like the subject. The 3D model should be able to make facial movements.

- Build voice samples of subject

- Using the available recordings, create voice samples of the subject. These will be used for responses the subject has not recorded, for responses that exist only in written form, and for new ideas that are formulated and used as a response.

- Combine 3D model and voice samples. (Frontend/Backend)

- Mash the voice samples with the 3D model. The user will be able to ask the 3D model questions, and the 3D model will repeat the exact same question back in the subject’s voice.

- Combine Data with Model. (Frontend / Backend)

- Mash the backend with the 3D model + voice samples. The user will be able to ask the subject a question; the system will fetch responses from the backend, and the 3D model will respond using the voice samples.

- Move system into AR world. (Frontend)

- Create an Augmented Reality room for the 3d Model / System.

- V0.1 Done.

- At this point the user can ask simple questions. There is no inferring or new thoughts created yet.

- Create knowledge & original thought. (Backend)

- Start experimenting with, and reading about, what it means to create new original ideas and formulate knowledge. Then use those new ideas and the constructed knowledge to respond to NEW questions not in the system.

- Architect and build out different models.

- Integrate into system (Frontend/Backend)

- Integrate knowledge models into the Frontend.

- V1 Done.
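To make the “Query Data” step from the roadmap above a bit more concrete, here is a minimal sketch of what a first, text-only version might look like. Everything in it is an assumption for illustration: the `Item` record, the `tokenize` and `best_answer` helpers, and the idea of scoring by shared words are all hypothetical, and the voice part (speech-to-text in, voice samples out) is left out entirely. It only shows the “fetch a canned response from the ingested data” idea, not new-idea generation.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """One ingested piece of data (hypothetical shape, not a real schema)."""
    source: str  # where it came from, e.g. "video" or "comment"
    text: str    # transcript or text extracted from the item


def tokenize(text):
    """Lowercase the text and split it into a set of words, dropping punctuation."""
    words = {w.strip(".,!?\"'").lower() for w in text.split()}
    words.discard("")
    return words


def best_answer(question, items):
    """Return the item sharing the most words with the question, or None.

    This is plain keyword overlap: a stand-in for whatever search or
    retrieval tool ends up being used, not a recommendation.
    """
    q = tokenize(question)
    best, best_score = None, 0
    for item in items:
        score = len(q & tokenize(item.text))
        if score > best_score:
            best, best_score = item, score
    return best


# Made-up sample data, purely for illustration.
items = [
    Item("comment", "I love cooking paella on Sundays"),
    Item("video", "My favorite team is the Yankees"),
]
match = best_answer("What is your favorite team?", items)
print(match.text if match else "No answer found")
```

Returning `None` when nothing overlaps matters here: at the v0.1 stage the system should admit it has no answer instead of making one up, since inferring and new thoughts only arrive later in the roadmap.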

You know… as I’ve gone through this a number of times, I’ve also noticed a few buckets that I or a team can dive into and improve on. They are:

- Data Identification & Ingestion

- Data Organization & Classification

- Query + Search

- AI – Knowledge & Idea Creation

- 3D model improvements

I’ve also had to stop a number of times and wonder: at what point does this representation become its own person? Are we just a collection of experiences built on top of each other? If so, where or when does consciousness begin? What is that “spark” that causes it? When do we become self-aware? If we represent topics/subjects/experiences as nodes in a graph, are the edges that connect these “like” items together knowledge? Are these edges, when aggregated, that spark?
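To picture the graph idea, here is a tiny sketch, assuming each experience is a node carrying a set of tags and that two nodes are “like” each other when they share at least one tag. The tags, the `build_edges` and `neighbors` helpers, and the sample experiences are all made up for illustration; how “likeness” should really be measured is exactly the open question.

```python
from itertools import combinations


def build_edges(nodes):
    """Connect every pair of nodes that share at least one tag.

    nodes: dict mapping a node name to a set of tags.
    Returns a dict mapping (name_a, name_b) to the set of shared tags,
    with each pair in alphabetical order.
    """
    edges = {}
    for a, b in combinations(sorted(nodes), 2):
        shared = nodes[a] & nodes[b]
        if shared:
            edges[(a, b)] = shared
    return edges


def neighbors(edges, name):
    """List the nodes directly connected to the given node."""
    found = []
    for a, b in edges:
        if a == name:
            found.append(b)
        elif b == name:
            found.append(a)
    return sorted(found)


# Made-up experiences and tags.
nodes = {
    "beach trip": {"family", "summer"},
    "bbq": {"family", "food"},
    "conference": {"work"},
}
edges = build_edges(nodes)
print(edges)
print(neighbors(edges, "bbq"))
```

In this toy version an edge is nothing more than the overlap between two experiences. Whether an aggregate of such edges amounts to knowledge is not something the code can answer.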

Here we go…